🆓 Get a Free 1-Year ChatGPT GO Membership & How to Keep It Active

A complete guide to getting a free 1-year ChatGPT GO membership and keeping it from being cancelled

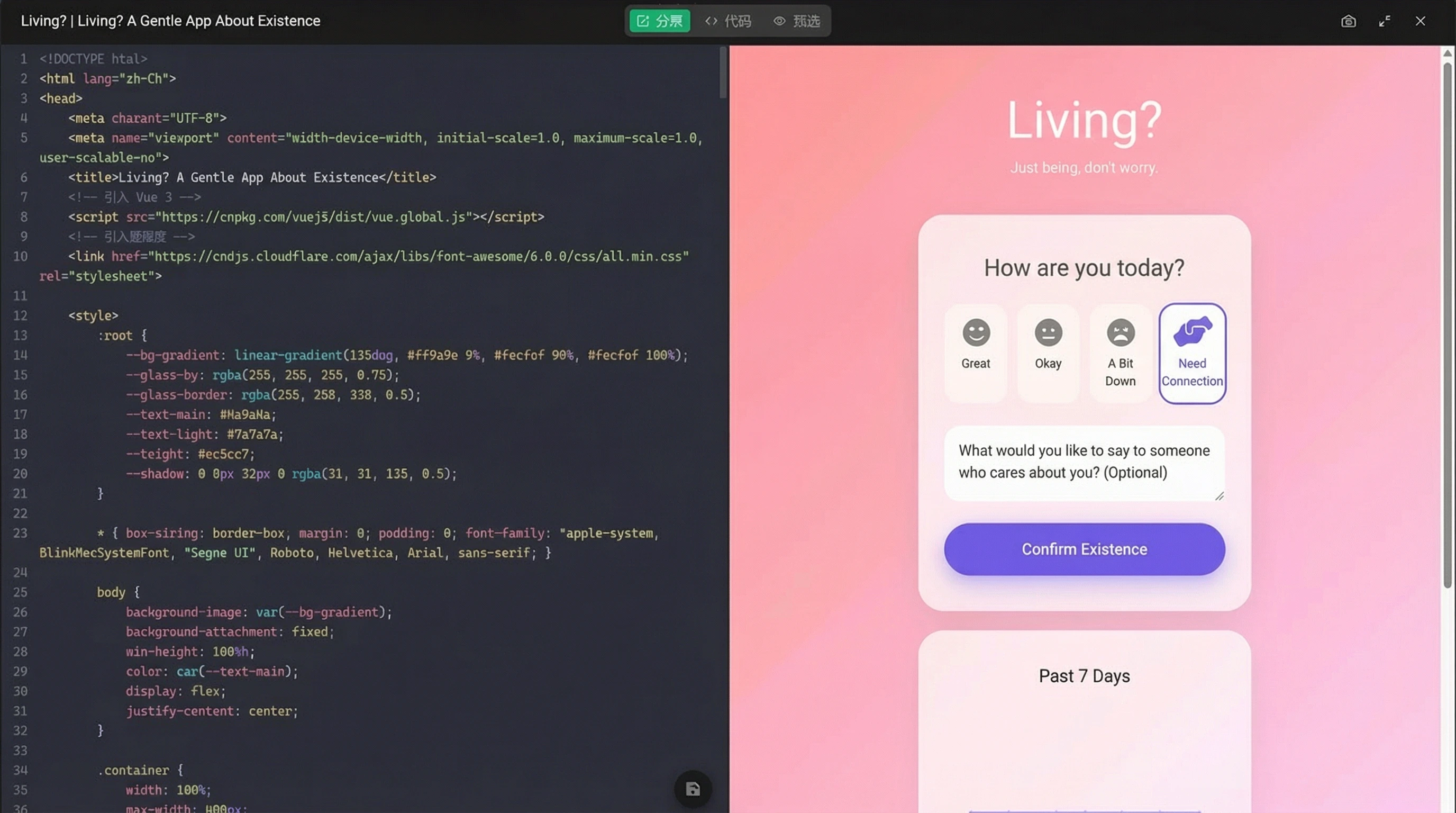

Do you want a free 1-year ChatGPT GO membership and a more capable AI assistant, but don't know where to start? Many people have already seen tutorials on Baidu showing how to get the free ChatGPT GO annual plan by using a VPN to change location and paying through a PayPal account. But after a few days of use, the membership gets cancelled. Why is that?

Today I'll help everyone solve this problem.

We'll test the latest method and see whether we can get a free 1-year ChatGPT GO membership without a PayPal account. I'll also cover the precautions to take in day-to-day use.

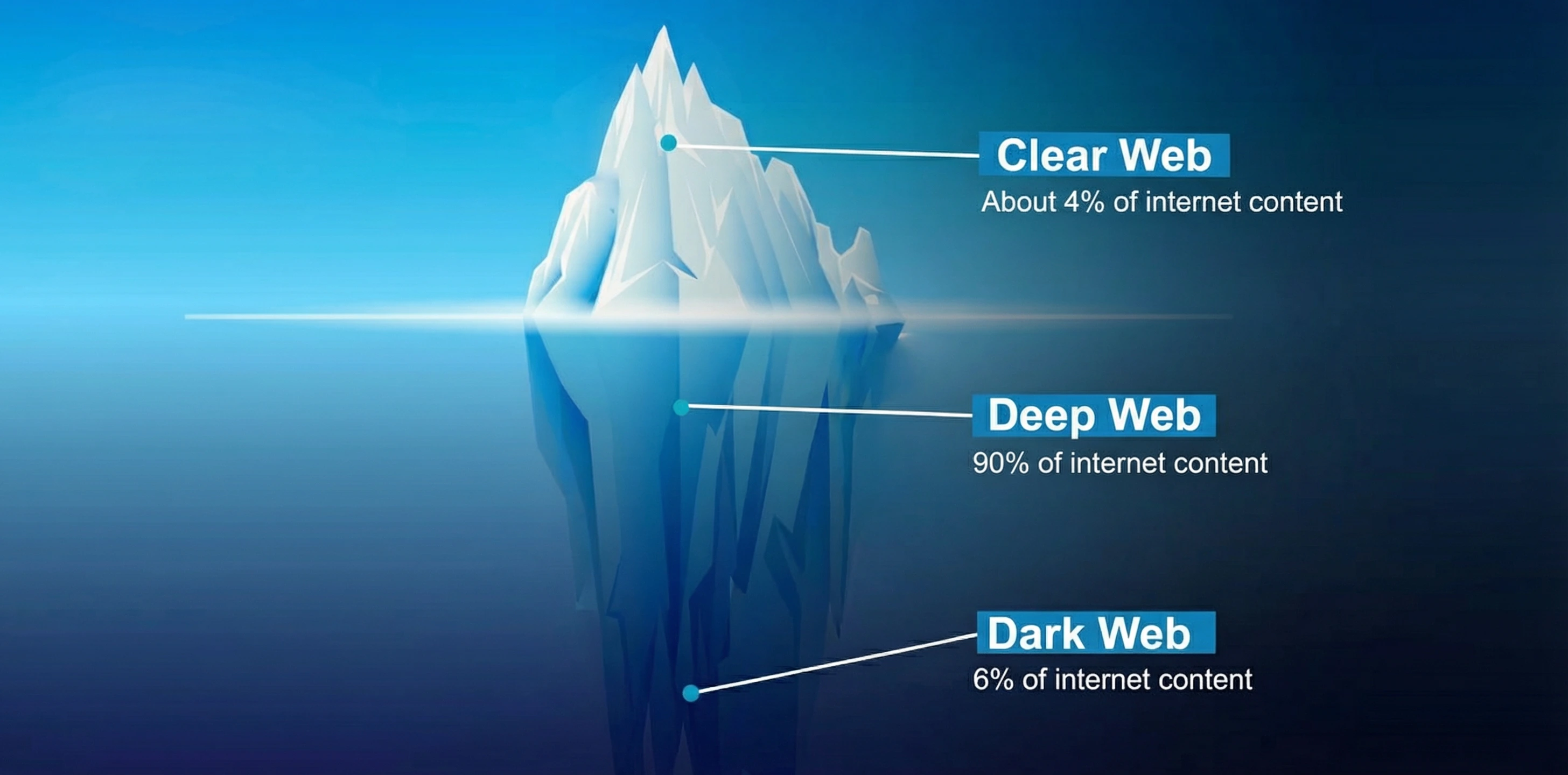

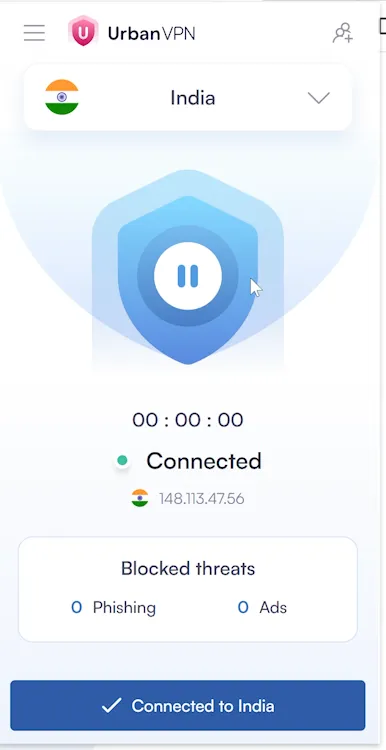

🌍 Step 1: Switch to Indian IP Address

Why an Indian IP Is Required

You need an Indian VPN; this is an essential prerequisite. Click here to install a free VPN with an Indian node. For better availability, a paid VPN is recommended: click here to get Surfshark.

Both have mobile apps, so installing directly on your phone is the most convenient option.

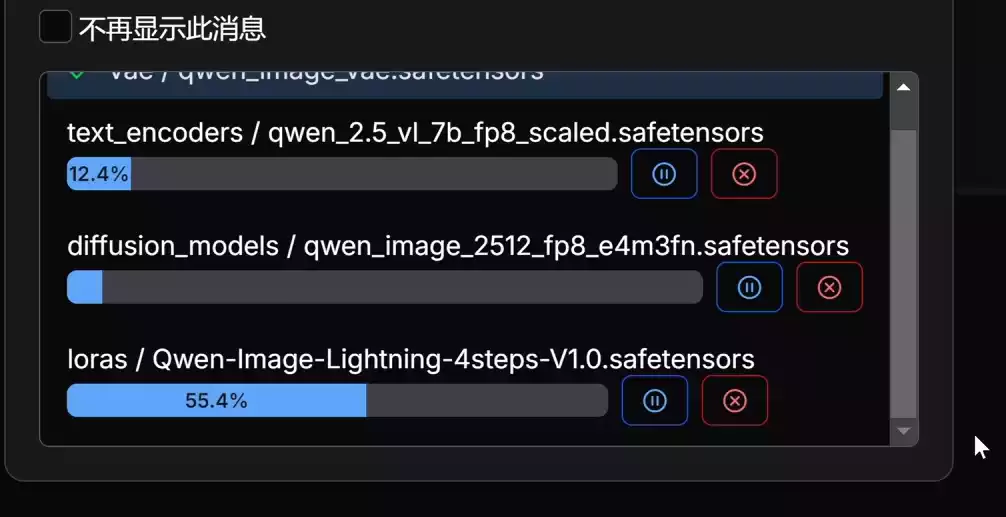

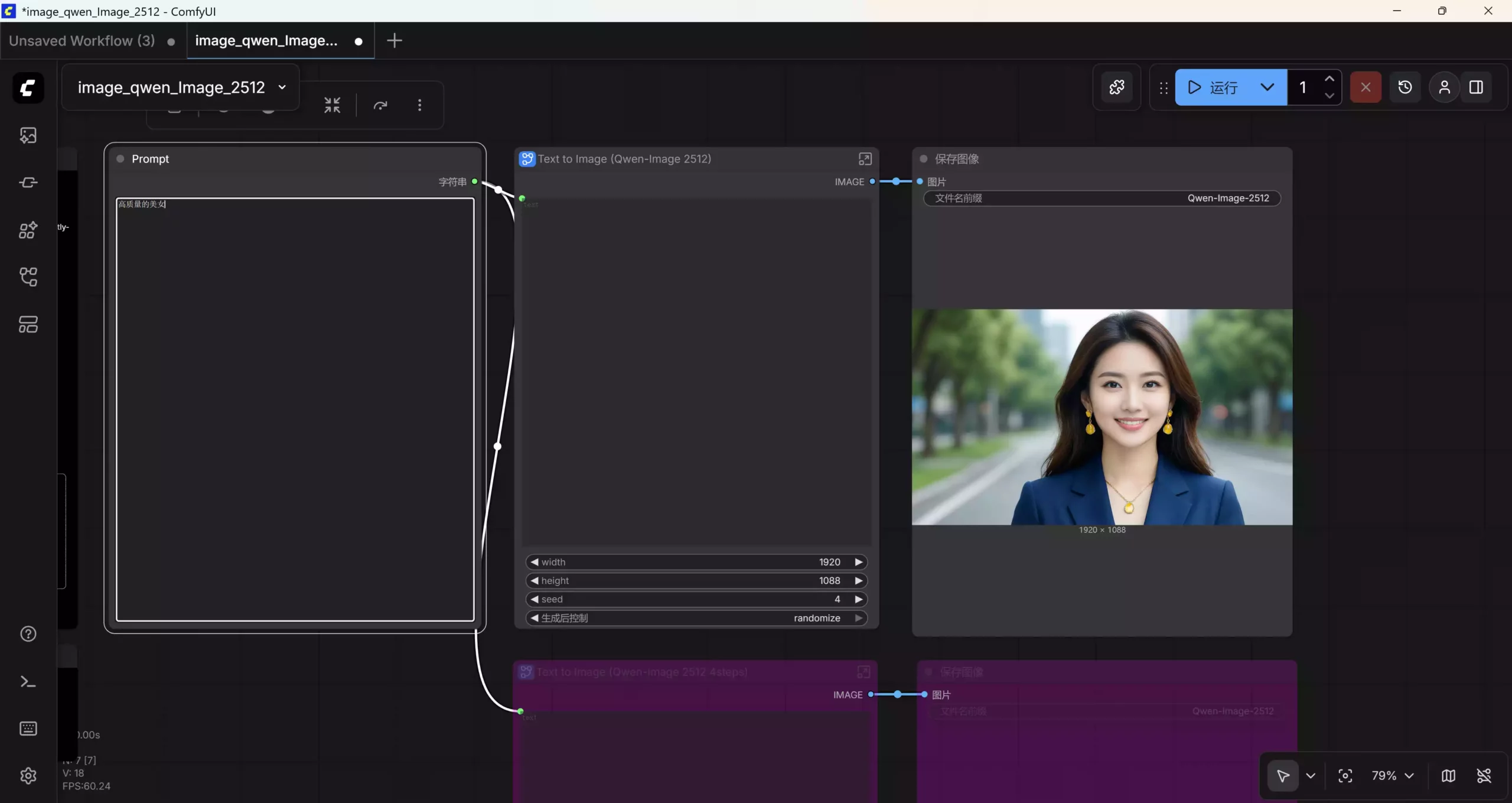

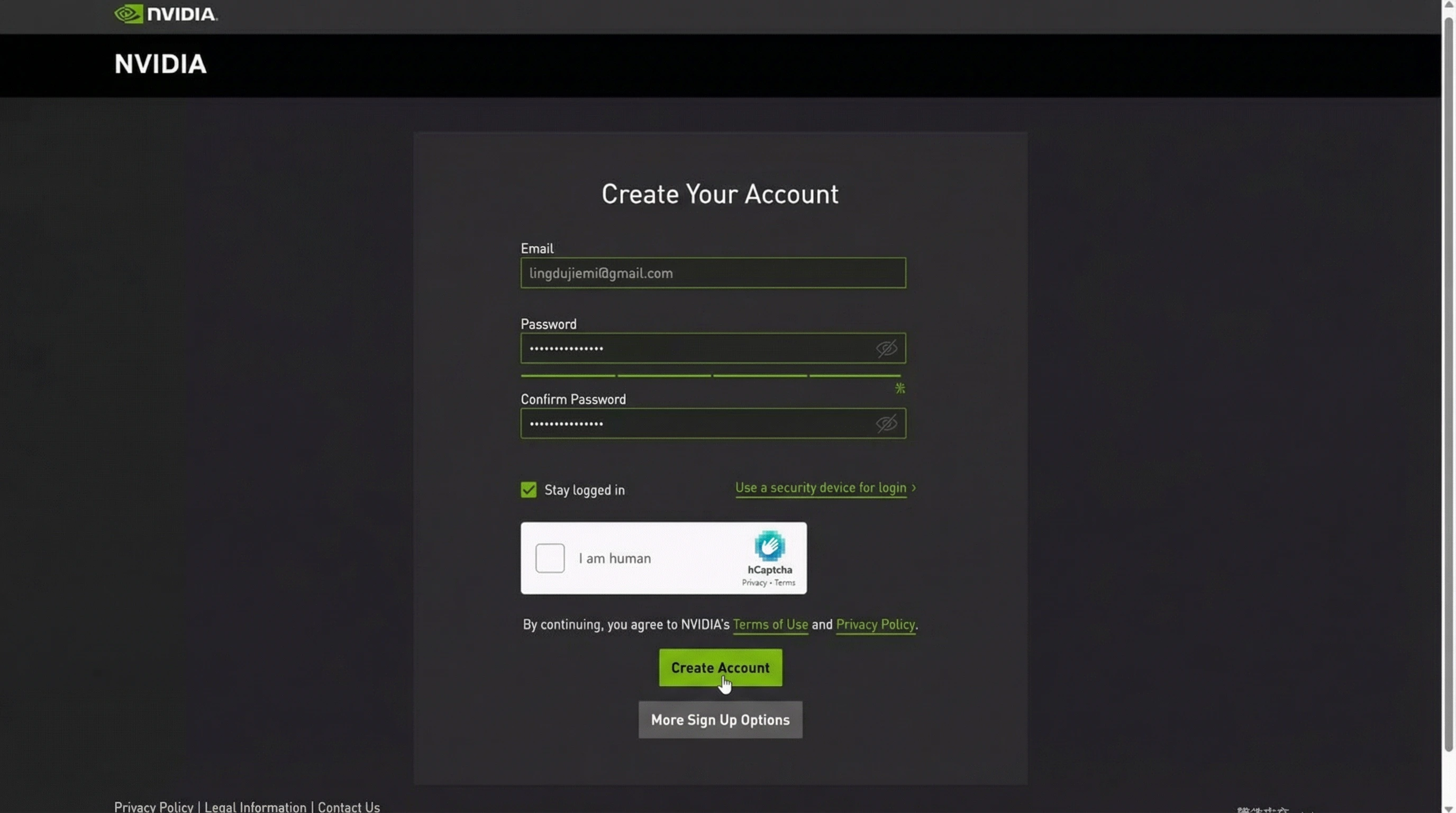

Step 2: Register on ChatGPT Official Website

After switching to an Indian IP, open the official ChatGPT website. You can register a new account or use an existing one (Foo IT Zone uses an existing account).

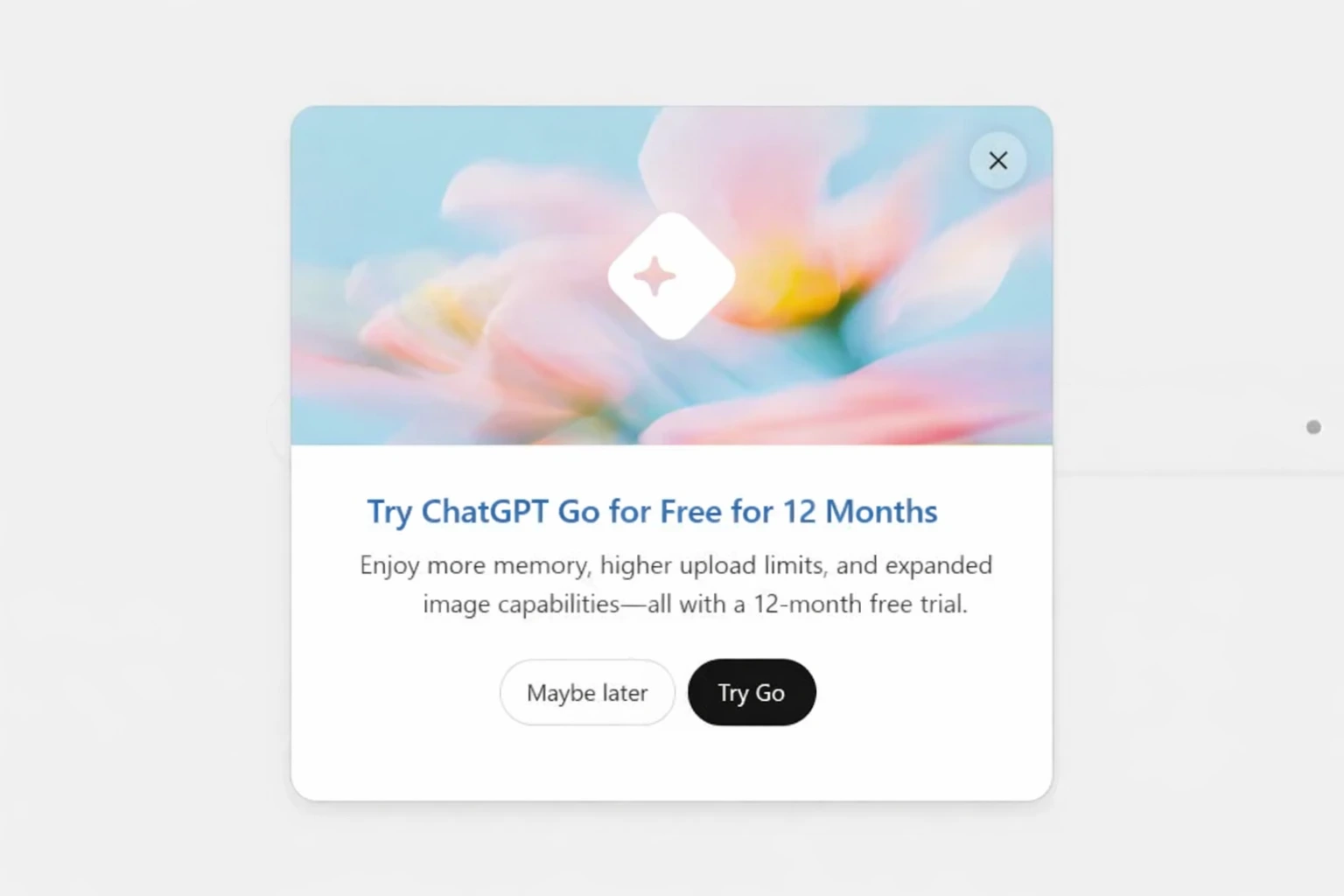

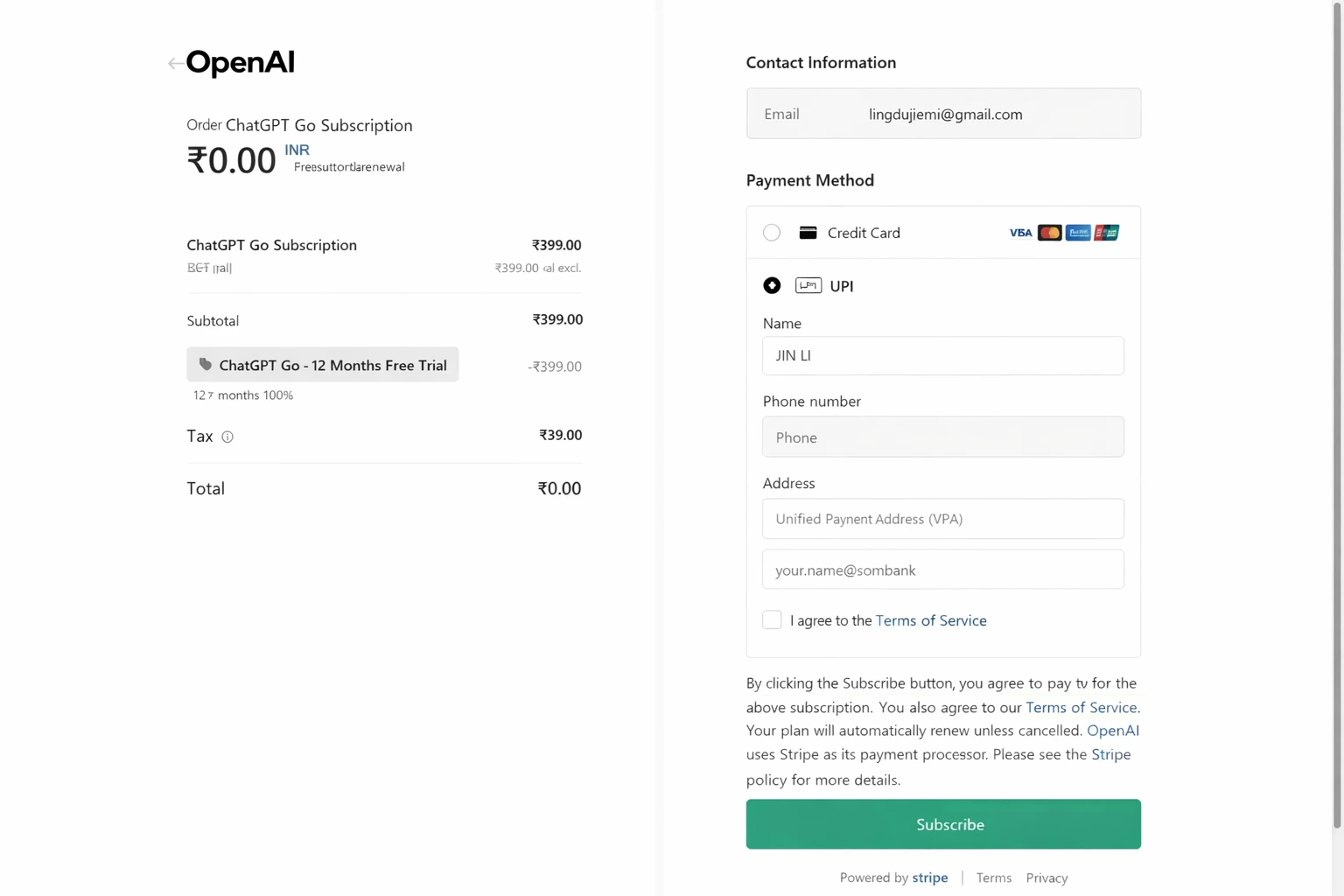

Once you're in, a banner at the top offers a 12-month free trial of ChatGPT, shown as a "Free Gift" or "Activate Plus" prompt. Click it to see the following page:

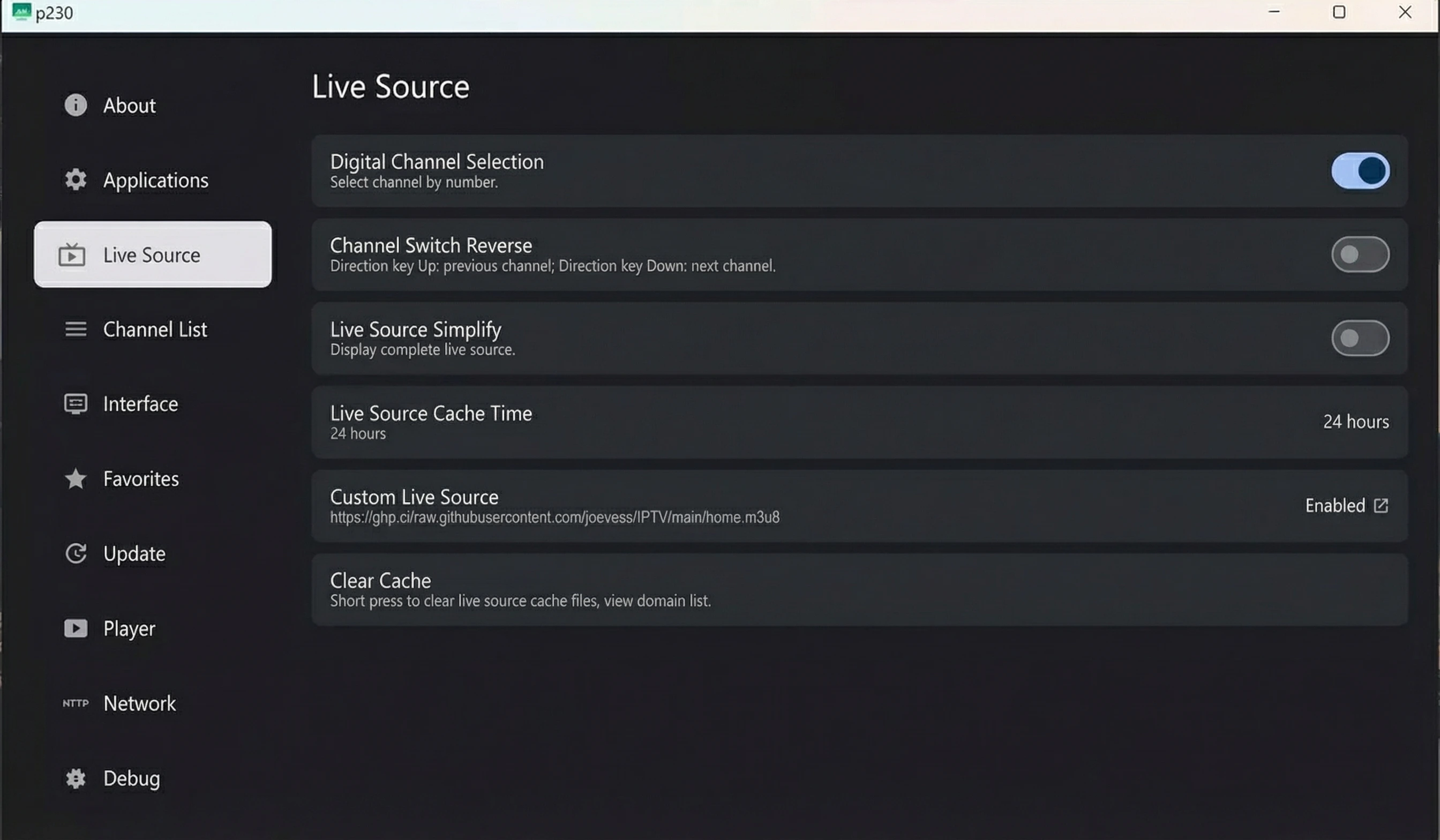

Step 3: Select GO Plan

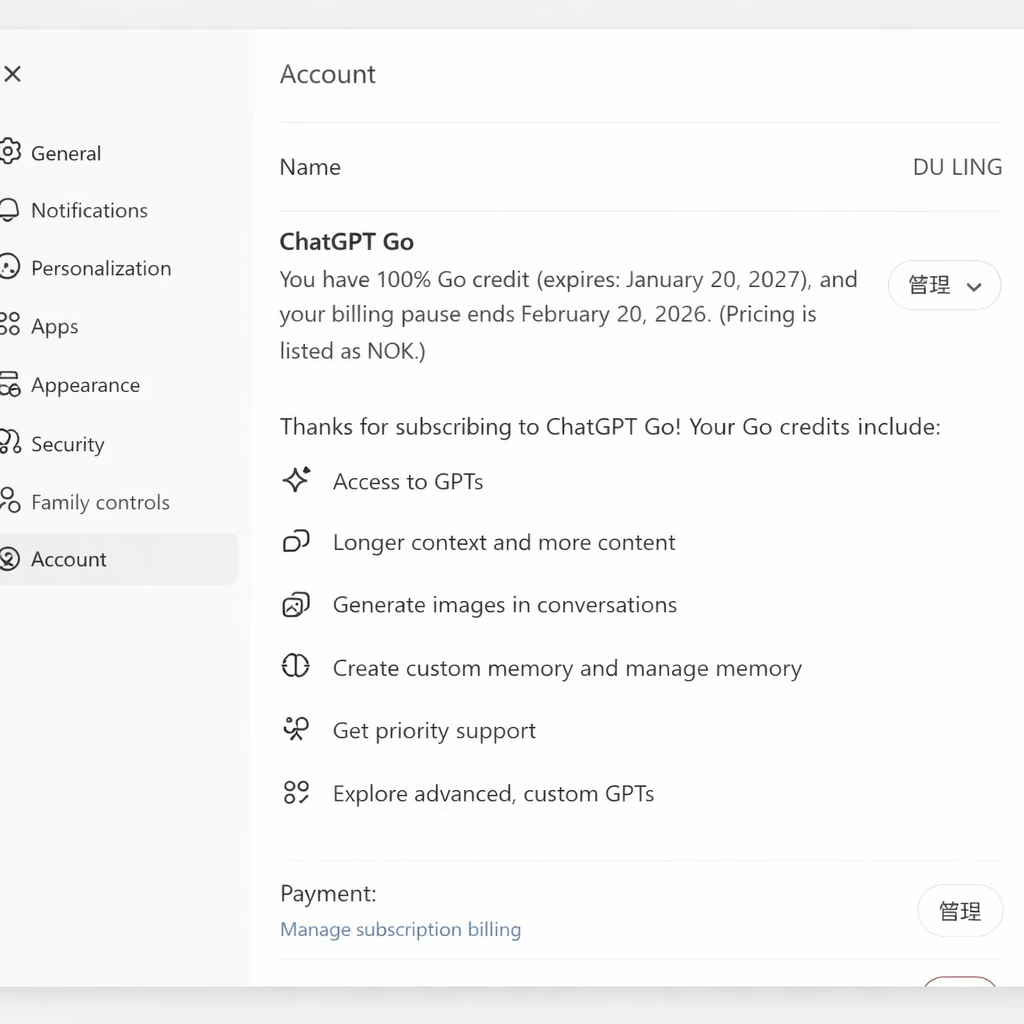

Choose the GO plan. The original price is 399 rupees, but it is now 0 rupees, which means you can get this 1-year discounted package. If you don't see the page above, either your IP address is not clean enough or your ChatGPT account is new; try a different account. A UnionPay credit card is recommended. Use your own real card (it doesn't need to be Indian), and there is no need to switch regions; just activate directly.

Important Notes for Stability

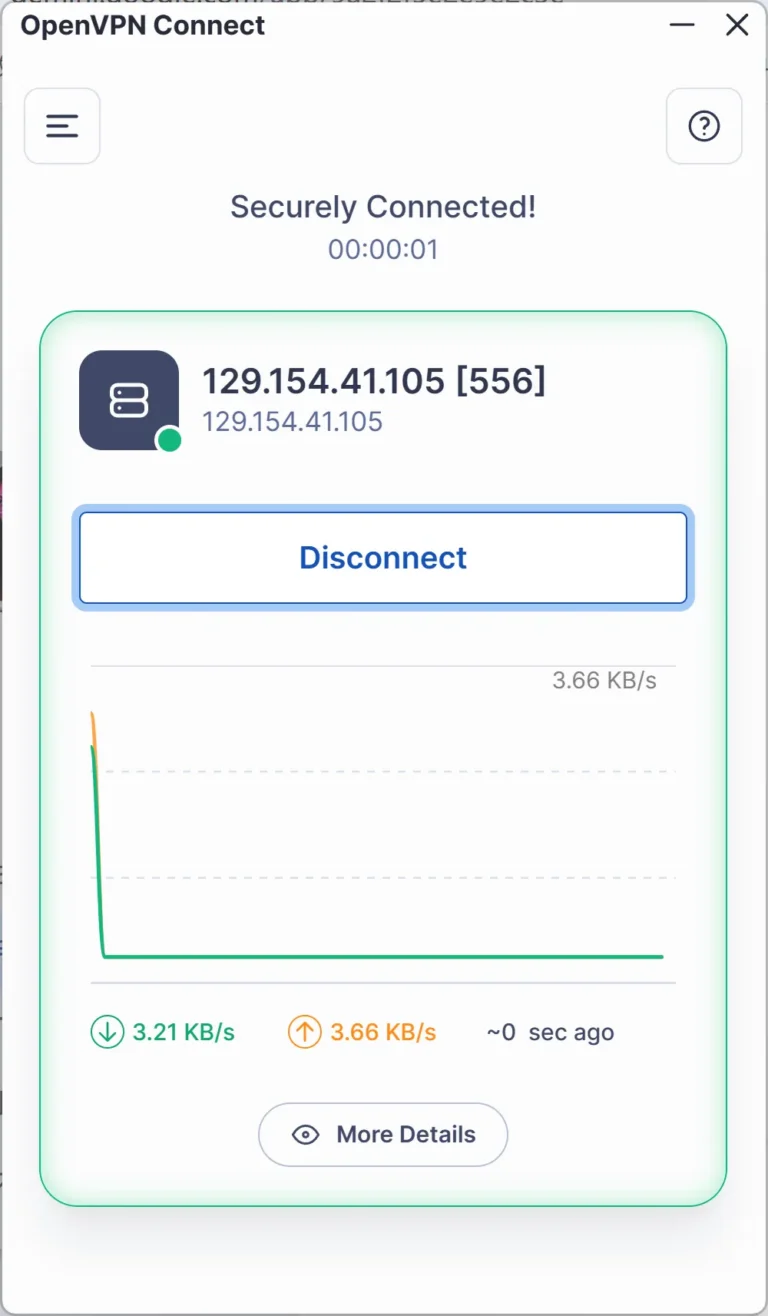

To avoid what happened before, where the GO membership is cancelled after a few days of use, a stable Indian VPN node is essential. Whether you're on PC or mobile, always keep the Indian VPN node connected while using the ChatGPT GO account. Free VPNs are nice but unstable, so consider a paid VPN that has Indian nodes, such as Surfshark or ProtonVPN. Both have mobile apps, so direct installation is convenient.

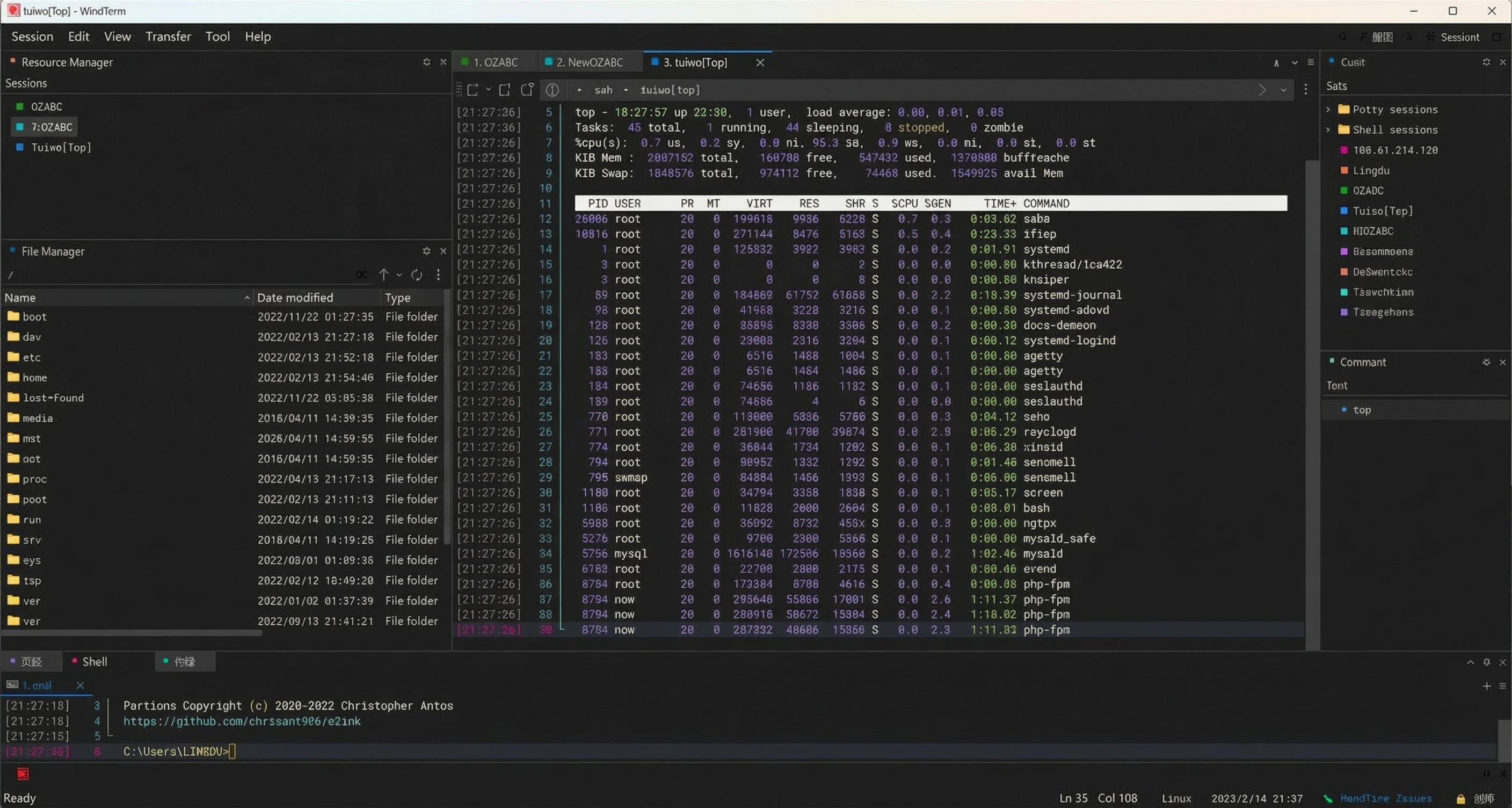

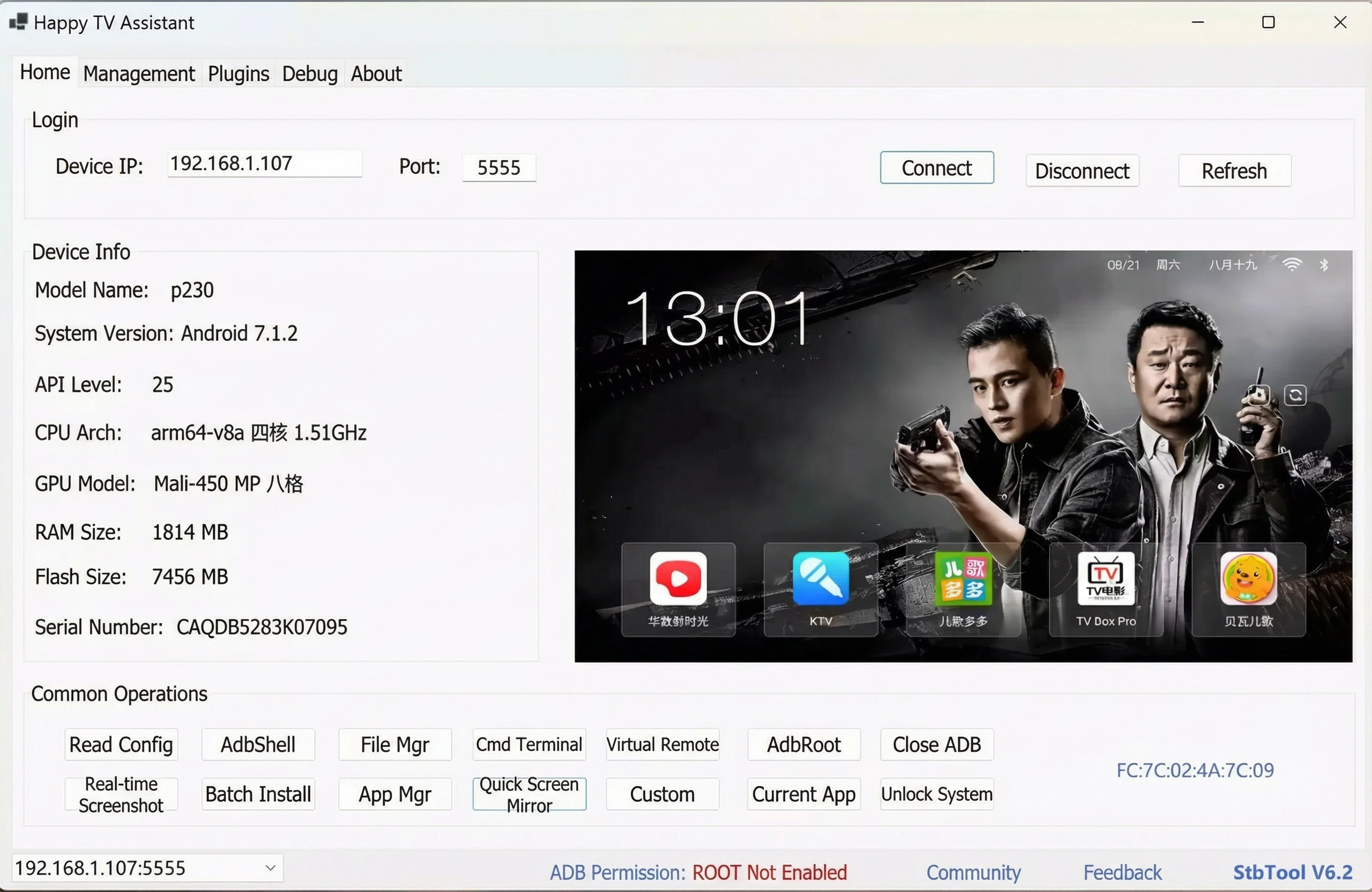

Or you can do what I do: get an always-free Oracle Cloud server and deploy OpenVPN or WireGuard on it, which gives you a permanent, free Indian proxy node.

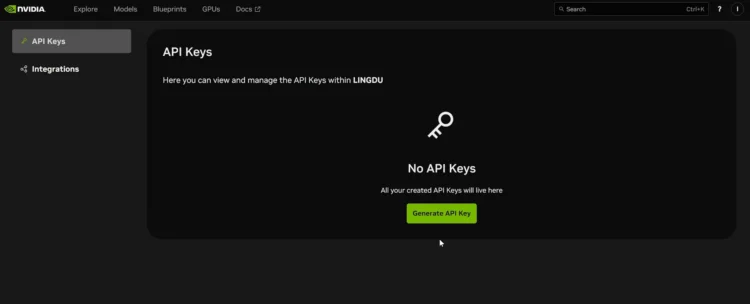

🛠️ Alternative: Free Oracle Cloud Server Method

OpenVPN One-Click Installation

wget https://git.io/vpn -O openvpn-install.sh && bash openvpn-install.sh

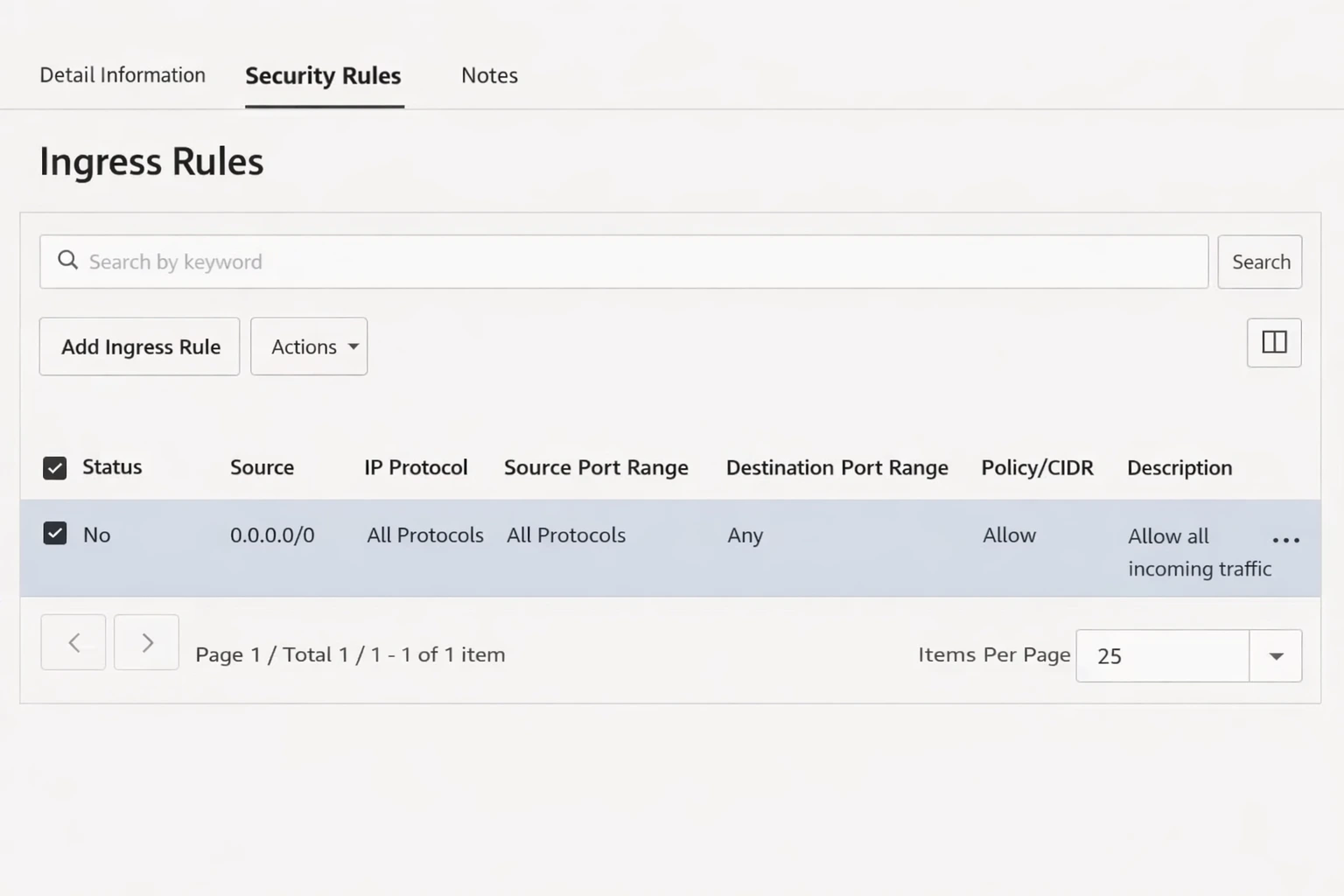

OCI Firewall Port Configuration

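In the OCI console, add an ingress rule to the Network Security Group (NSG) or Security List. The values below match the WireGuard setup further down; for OpenVPN, open the protocol and port you picked in the install script instead: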

- Protocol: UDP

- Port: 51820

- Source: 0.0.0.0/0

Or simply open all ports so you don't have to add a rule each time!

Ubuntu System Configuration

Open all ports:

iptables -P INPUT ACCEPT

iptables -P FORWARD ACCEPT

iptables -P OUTPUT ACCEPT

iptables -F

The Ubuntu image ships with persistent iptables rules by default; disable them:

apt-get purge netfilter-persistent

reboot

Or force delete:

rm -rf /etc/iptables && reboot

WireGuard Installation (Better Security)

Alternatively, install WireGuard. It offers better security and encryption, which suits users with special requirements:

Ubuntu / Debian:

apt update

apt install -y wireguard qrencode

Generate Keys

umask 077

wg genkey | tee server_private.key | wg pubkey > server_public.key

wg genkey | tee client_private.key | wg pubkey > client_public.key

View public keys:

cat server_public.key

cat client_public.key

View private keys:

cat server_private.key

cat client_private.key

Server Configuration

Create configuration file:

sudo nano /etc/wireguard/wg0.conf

[Interface]

Address = 10.8.0.1/24

ListenPort = 51820

PrivateKey = server private key content

PostUp = iptables -A FORWARD -i wg0 -j ACCEPT; iptables -A FORWARD -o wg0 -j ACCEPT; iptables -t nat -A POSTROUTING -o eth0 -j MASQUERADE

PostDown = iptables -D FORWARD -i wg0 -j ACCEPT; iptables -D FORWARD -o wg0 -j ACCEPT; iptables -t nat -D POSTROUTING -o eth0 -j MASQUERADE

[Peer]

PublicKey = client public key content

AllowedIPs = 10.8.0.2/32

Note: Change the network interface name eth0 in the config above, since it differs between machines. Run ip a to find yours (on OCI it is usually ens3 or enp0s3).

If it is ens3, for example, replace every eth0 above with ens3.
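If you'd rather not read through the ip a output by hand, the interface can usually be picked out of the default route. The following is only a sketch: the helper name default_iface is made up for this example, and it assumes the server has a single IPv4 default route reported by the iproute2 ip tool.

```bash
#!/usr/bin/env bash
# default_iface is a hypothetical helper, not part of WireGuard or iproute2.
# It reads routing-table lines on stdin and prints the word that follows
# "dev" on the first line starting with "default".
default_iface() {
  awk '$1 == "default" {
         for (i = 1; i < NF; i++) if ($i == "dev") { print $(i + 1); exit }
       }'
}

# Typical use on the server:
#   iface=$(ip -4 route show default | default_iface)
#   sudo sed -i "s/eth0/${iface}/g" /etc/wireguard/wg0.conf
```

The sed line replaces every occurrence of eth0 in wg0.conf, which in the config above only appears in the PostUp and PostDown rules.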

Enable IP Forwarding

sudo nano /etc/sysctl.conf

Uncomment or add:

net.ipv4.ip_forward=1

Then execute:

sudo sysctl -p
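To confirm the setting actually took effect, you can read the kernel's live value back. This is a small sketch; the function name forwarding_enabled is just an illustration, and the optional file argument exists only so the check can be tried against a test file.

```bash
#!/usr/bin/env bash
# forwarding_enabled is a hypothetical helper.
# It succeeds (exit status 0) when the given file contains exactly "1".
# With no argument it reads the kernel's live IPv4 forwarding switch.
forwarding_enabled() {
  local flag_file="${1:-/proc/sys/net/ipv4/ip_forward}"
  [ "$(cat "$flag_file" 2>/dev/null)" = "1" ]
}

if forwarding_enabled; then
  echo "IPv4 forwarding is on"
else
  echo "IPv4 forwarding is off; re-check /etc/sysctl.conf and run: sudo sysctl -p"
fi
```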

Start WireGuard Service

sudo systemctl enable wg-quick@wg0

sudo systemctl start wg-quick@wg0
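Once the service is running, sudo wg show prints the interface and its peers. To see at a glance whether a client has actually connected, one option is to filter the latest-handshakes output, which lists each peer's public key and the Unix timestamp of its last handshake (0 if there has been none). The helper name peers_with_handshake below is made up for this sketch.

```bash
#!/usr/bin/env bash
# peers_with_handshake is a hypothetical helper.
# It reads "<public key><TAB><unix timestamp>" lines on stdin and prints the
# keys of peers whose timestamp is greater than 0, meaning at least one
# handshake has completed.
peers_with_handshake() {
  awk '$2 > 0 { print $1 }'
}

# Typical use on the server:
#   sudo wg show wg0 latest-handshakes | peers_with_handshake
```

If nothing is printed after the client connects, the UDP port rule in the OCI console and the server's iptables rules are the first things to re-check.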

Client Configuration (Windows / iOS / Android / macOS)

Client configuration example:

[Interface]

PrivateKey = client private key

Address = 10.8.0.2/32

DNS = 1.1.1.1

[Peer]

PublicKey = server public key

Endpoint = your server public IP:51820

AllowedIPs = 0.0.0.0/0

PersistentKeepalive = 25
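Rather than pasting keys into the template by hand, the client file can be generated from the key files created earlier. The sketch below assumes the same addresses and port as the example above; the function name render_client_conf and the IP 203.0.113.10 are placeholders, so substitute your own server's public IP.

```bash
#!/usr/bin/env bash
# render_client_conf is a hypothetical helper.
# Arguments: client private key, server public key, server public IP.
# It prints a client config matching the example above to stdout.
render_client_conf() {
  local client_key="$1" server_pub="$2" server_ip="$3"
  cat <<EOF
[Interface]
PrivateKey = ${client_key}
Address = 10.8.0.2/32
DNS = 1.1.1.1

[Peer]
PublicKey = ${server_pub}
Endpoint = ${server_ip}:51820
AllowedIPs = 0.0.0.0/0
PersistentKeepalive = 25
EOF
}

# Typical use, run in the directory that holds the key files:
#   render_client_conf "$(cat client_private.key)" "$(cat server_public.key)" 203.0.113.10 > client.conf
#   qrencode -t ansiutf8 < client.conf    # scan the QR code with the WireGuard mobile app
```

The qrencode package installed earlier is what makes the last step possible: on iOS and Android the WireGuard app can import the tunnel by scanning the QR code, so there is no need to copy the file to the phone.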

Completely free • Stable connection • No PayPal required • Permanent solution
